ChatGPT and Home Assistant

Expanding Home Assistant's capabilities using ChatGPT's code generation

I have a smart house and I'm loving it. It's based on Home Assistant. I also love natural language prompting, whether by voice or by typing. It feels great when the machine can just grab my thoughts and act on them, instead of me having to be very specific and hunt for the right buttons. I imagine something like this:

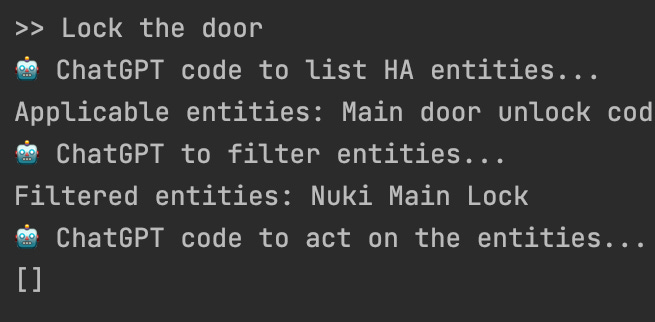

>> Lock the door

Door locked successfully

Spoiler: it worked with ChatGPT, and here’s the code repo

Home Assistant’s Assist

Home Assistant’s community has actually already built, and is still building, a rich ecosystem of integrations for using natural language to control your house. It’s called Assist and it is mainly focused on voice assistants. However, Assist does have a textual prompt where you can chat with it.

So, I just started out with the Assist prompt:

Now, that’s a rather disappointing answer given the rich ecosystem that the amazing community of Home Assistant is building.

LLMs

It’s 2024 now, and we have very strong large language models (LLMs) such as OpenAI's ChatGPT, Google's Bard and others that are capable of general-purpose language understanding and generation. Those highly capable models should probably be able to understand such a simple request in a rather narrow context:

The user has a door and he wants it locked.

These are all of his available devices.

He’s using Home Assistant.

How can LLMs control things?

First of all, we need to understand how these models can interact with the real world other than just printing back textual answers. Well, there is actually more than one answer to that. OpenAI has been active in providing creative answers to this question, introducing two new ways during 2023:

Function calling - which was adapted for Home Assistant in a project called Extended OpenAI Conversation.

ChatGPT plugins - which were experimented with and discussed in that Reddit thread.

And of course, there is the good old-fashioned way: ask the LLM to generate code, and just run it. I’ll add a few words on why I prefer this last approach at the end of this post.

Challenges

You could be thinking, yeah, just give it all to ChatGPT and he’ll chew it all and make the magic. Well, it’s not that far from true and that’s why generative AI is so very cool. However, there are some basic challenges:

Entity (device) resolution - ChatGPT doesn’t know the list of all of my devices that are capable of performing the task. It has to get it somehow.

Code extraction and execution - ChatGPT does not provide only code as an answer. Instead, it adds explanations around it. We should remove the explanations from the answer, execute the code, and perhaps use the execution results.

Generality vs. Specificity - On one hand, the more freedom we give ChatGPT (or any worker), the more creative and general he can be with the solution. But on the other hand, too much freedom and it makes mistakes that are hard to handle.

Solutions

Entity (device) resolution

We’ve already established that we’re going to ask ChatGPT to generate code and run it. If so, then how about we add a step to the plan, in which we use ChatGPT to find the right entity?

Going back to our example of:

Lock the door

We can just plug the request into a prompt and ask ChatGPT something like:

Given that the user wants to “Lock the door”, list all devices that could be relevant

By doing so, we can first get the list of relevant devices from Home Assistant. Only once we have those devices, we’ll ask ChatGPT to generate the code that actually acts on those devices.

In the code, look for PROMPT_MASK_LIST_ENTITIES:

Write Python function "list_entities()" that returns a list that can perform this action…
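To make this step concrete, here is a rough sketch of the kind of function such a prompt might produce. It is an illustration, not the code from the repo: the env var names `HA_URL` and `HA_TOKEN` and the helper names are hypothetical, and it assumes that filtering by the `lock` domain is enough for this request. The `GET /api/states` endpoint with a bearer token is part of Home Assistant's REST API.

```python
import json
import os
import urllib.request


def fetch_states(base_url, token):
    """Fetch every entity state from Home Assistant's REST API (GET /api/states)."""
    request = urllib.request.Request(
        f"{base_url}/api/states",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def filter_by_domain(states, domain):
    """Keep the original state objects whose entity_id starts with '<domain>.'."""
    return [s for s in states if s["entity_id"].startswith(domain + ".")]


def list_entities():
    # HA_URL and HA_TOKEN are hypothetical env var names used for illustration.
    states = fetch_states(os.environ["HA_URL"], os.environ["HA_TOKEN"])
    return filter_by_domain(states, "lock")
```

Keeping the filter separate from the network call makes it easy to check the filtering logic against a hand-written list of states before pointing it at a real server.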

Utilizing the LLM’s general knowledge

Specifically in my setup, my door lock is not called door and doesn’t have any attribute that can tell it’s a door lock. However, it is called Nuki, which is a brand name for smart door locks. So, we can utilize the general knowledge the LLM has to better match the relevant devices.

In the code, look for PROMPT_MASK_SELECT_ENTITIES:

Which of the following entities is or are the best to perform this request?
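One possible way to wire up this selection step is sketched below. The function names are hypothetical and the prompt wording is only approximated from the snippets in this post. Matching entity IDs by plain substring is an assumption that keeps the sketch short; it would confuse `lock.nuki` with `lock.nuki_2`, so a real implementation would likely need something stricter.

```python
import json


def build_select_prompt(command, entities):
    """Ask the model to pick entities, listing only the fields it needs."""
    summary = [
        {
            "entity_id": e["entity_id"],
            "friendly_name": e.get("attributes", {}).get("friendly_name"),
            "state": e.get("state"),
        }
        for e in entities
    ]
    return (
        f"A user asked to {command}. "
        "Which of the following entities is or are the best to perform this request?\n"
        + json.dumps(summary, indent=2)
    )


def pick_mentioned(reply, entities):
    """Return the entities whose entity_id appears verbatim in the model's reply."""
    return [e for e in entities if e["entity_id"] in reply]
```

With a candidate list containing a Nuki lock and a hallway light, a reply that mentions `lock.nuki` would leave only the lock in the result.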

Code extraction and execution

ChatGPT does not provide only code as an answer. Instead, it adds explanations around it. However, for this use case, we don’t need the explanation. Just the code.

I tried this tip from StackOverflow of instructing ChatGPT not to include any explanations in the response. But it doesn’t consistently work on ChatGPT. No pretty magic here. I just used the fact that ChatGPT always surrounds the code with three backticks:

```
this_is_code()
```

Then I wrote a regular expression to extract it.

Once extracted, Python, being an interpreted language, can dynamically load the code and execute it.
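A minimal sketch of both halves, extraction and dynamic loading, could look like this. The names `extract_code` and `load_function` are hypothetical, and the pattern is one plausible regular expression rather than the one in the repo. It assumes the answer holds a single fenced block, optionally tagged with a language name.

```python
import re

FENCE = "`" * 3  # Three backticks, built this way to keep the example readable.
# Opening fence, optional language tag, then everything up to the closing fence.
CODE_BLOCK = re.compile(FENCE + r"[\w+-]*[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code(answer):
    """Return the first fenced code block in the answer, or None if there is none."""
    match = CODE_BLOCK.search(answer)
    return match.group(1).strip() if match else None


def load_function(code, name):
    """Execute generated code in a fresh namespace and return the named function."""
    namespace = {}
    exec(code, namespace)  # Runs arbitrary code: only do this with code you trust.
    return namespace[name]
```

Calling `load_function(extract_code(answer), "list_entities")()` would then run the generated function. Note that `exec` gives the generated code the same permissions as the calling script.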

Generality vs. Specificity

In this reddit discussion, there was a funny, yet very interesting question:

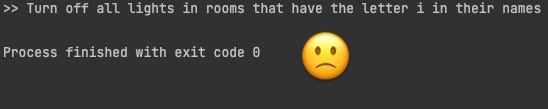

Could you ask it to do weird requests like: "Turn on the lights in rooms that contain the letter I"?

This question seems like a joke at first glance. But it actually encapsulates the extensive potential of LLMs. An LLM is not bound to the engineering of the specific system that it is interacting with. It can build on a wider range of concepts - whatever it learned. Perhaps a more useful example would be:

Turn on the light, wait 10 seconds, then turn off the light.

Historically, voice assistants such as Amazon’s Alexa, Apple’s Siri and other language models were more specific in language understanding. They are extractive models. They would look for the subject and verb of such instructions, resolve the machine instruction and device based on some mapping, and run it.

That historical approach would not be able to handle more complicated requests such as the two above. And indeed, we can expect to see voice assistants such as Amazon’s Alexa and Apple’s Siri adopting the generative AI approach soon. I’d guess it will happen during 2024.

On the other hand, LLMs are still not that good with generating long and complicated code as they tend to write bugs. Just like humans. So, we really need to find a balance here.
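For a sense of scale, the "turn on, wait, turn off" request needs code roughly like the sketch below. This is a hand-written illustration, not ChatGPT's output: `HA_URL`, `HA_TOKEN`, `call_service` and `blink` are hypothetical names. The `POST /api/services/<domain>/<service>` endpoint is part of Home Assistant's REST API.

```python
import json
import os
import time
import urllib.request


def call_service(domain, service, entity_id):
    """POST to /api/services/<domain>/<service>, Home Assistant's REST endpoint."""
    # HA_URL and HA_TOKEN are hypothetical env var names used for illustration.
    request = urllib.request.Request(
        f"{os.environ['HA_URL']}/api/services/{domain}/{service}",
        data=json.dumps({"entity_id": entity_id}).encode(),
        headers={
            "Authorization": f"Bearer {os.environ['HA_TOKEN']}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


def blink(entity_id, seconds=10, call=call_service, sleep=time.sleep):
    """Turn a light on, wait, then turn it off again."""
    call("light", "turn_on", entity_id)
    sleep(seconds)
    call("light", "turn_off", entity_id)
```

Even this short script has several places to go wrong (the URL, the headers, the payload, the order of calls), which is why longer generated programs fail more often.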

How general is it?

My attempts failed at the “rooms that contain the letter I” test on ChatGPT-3.5. It was not able to query the API twice, as needed to implement this request. However, when I tried the same prompt on ChatGPT-4, it was able to perform the required multiple API calls.

With the “Turn on the light, wait 10 seconds, then turn off the light” request, ChatGPT-3.5 did a little better. It still generated failing code, but it was much closer to running:

Flow design

To balance between short tasks (specificity) and leaving enough room for the user to be creative and ChatGPT to handle cases that I did not think about (generality), I went with the following flow:

Ask ChatGPT to generate code that lists all devices capable of doing what the user asked for.

Execute the code and get the list of devices.

Ask ChatGPT which of those devices best match the user request. This is where the LLM’s general knowledge comes in handy.

Ask ChatGPT to generate code that acts on the devices.

Execute the code.
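The five steps above can be sketched as a single function. Everything here is hypothetical scaffolding: `ask_llm` stands for whatever sends a prompt to the model and returns its code or answer, `run_code` for whatever executes generated code and calls the named function, and `prompts` for a dictionary holding the three prompt templates.

```python
def run_command(command, ask_llm, run_code, prompts):
    """Run the five-step flow; ask_llm and run_code are supplied by the caller."""
    # Steps 1-2: generate and execute code that lists candidate devices.
    list_code = ask_llm(prompts["list"].format(command=command))
    candidates = run_code(list_code, "list_entities")
    # Step 3: let the model choose among the candidates.
    chosen = ask_llm(prompts["select"].format(command=command, entities=candidates))
    # Steps 4-5: generate and execute code that acts on the chosen devices.
    act_code = ask_llm(prompts["act"].format(command=command, entities=chosen))
    return run_code(act_code, "call_entity")
```

Passing the model call and the executor in as arguments means the flow can be exercised with stand-ins before any real device is touched.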

Prompt engineering

This part requires some trial and error. However, there are basic building blocks that a good prompt should have. You can find a few such tutorials online. Here’s what I basically did after a few iterations:

Context

Given a Home Assistant server, a user wants to “Lock the door“,

Task

Write a Python function list_entities() that returns a list of entities that can perform this action.

Instructions

The list should contain only the original objects returned from the API.

Consider mainly entity_id, friendly name and state.

…

You can make more than one API call if you need to retrieve more data
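Assembled in code, the context, task and instructions blocks might be combined along these lines. This is a sketch: `PROMPT_TEMPLATE`, `INSTRUCTIONS` and `build_prompt` are hypothetical names, and only the instructions quoted above are included. Whatever the elided ones say is left out rather than guessed.

```python
PROMPT_TEMPLATE = (
    'Given a Home Assistant server, a user wants to "{command}".\n'
    'Write a Python function "list_entities()" that returns a list of entities '
    "that can perform this action.\n"
    "{instructions}"
)

# Only the instructions quoted in the post; the elided ones are not reproduced.
INSTRUCTIONS = [
    "The list should contain only the original objects returned from the API.",
    "Consider mainly entity_id, friendly name and state.",
    "You can make more than one API call if you need to retrieve more data.",
]


def build_prompt(command):
    """Fill the context, task, and instruction blocks for one user command."""
    bullets = "\n".join(f"- {line}" for line in INSTRUCTIONS)
    return PROMPT_TEMPLATE.format(command=command, instructions=bullets)
```

Keeping the instructions in a list makes each trial-and-error iteration a one-line change.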

Why not use ChatGPT’s dedicated APIs?

The two solutions mentioned above, function calling and plugins, are, as of today, very ChatGPT specific. Besides, they do not allow much verification of the interaction between ChatGPT and the smart house. Why is verification important?

Safety - You don’t want ChatGPT turning other devices on/off because that’s annoying and frustrating. To be honest, that’s not really avoidable, but verification before execution can mitigate the problem.

Verbosity - You want to be able to re-iterate and fix your prompts based on the responses. So, having a mid-step of “that’s what we plan to do” is very useful for pinpointing what is unclear and fixing the prompt accordingly. It sheds light on what exactly the LLM did not understand or what was missing from the prompt.
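A verification mid-step of this kind can be very small. The sketch below is one hypothetical shape for it, not something taken from the repo: `run` is whatever executes the generated code, and `confirm` is any callable that returns True to approve.

```python
def execute_with_review(code, run, confirm):
    """Show the generated code as the plan, and run it only if it is approved."""
    print("Plan (generated code):")
    print(code)
    if not confirm():
        return None  # Rejected: nothing is executed.
    return run(code)
```

In an interactive script, `confirm` could be as simple as `lambda: input("Run this? [y/N] ").lower() == "y"`.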

Besides, those methods are not easily replaceable with local LLMs such as Meta’s Llama 2 or others. Using those approaches actually requires you to allow access to your smart home from the open internet, which you can actually do in a rather secure manner.

The final result

Here’s the code repo on GitHub. Less than 150 lines. At the heart of it are the 3 prompts:

PROMPT_MASK_LIST_ENTITIES = 'Given the env vars HOME_ASSISTANT…'

'Write Python function "list_entities()" that returns a list… '

….

PROMPT_MASK_SELECT_ENTITIES = 'A user asked to {command}. ' \

'Which entities is or are the best to perform this request?'

….

PROMPT_DO_THE_COMMAND = 'Given the env vars HOME_ASSISTANT_…'

'Write Python function "call_entity()" to do that specifically'

….

It runs kind-of-ok. You just set the required environment variables, type your command in the code, and it does the magic. However, many times the code that ChatGPT returns contains a bug. I bet the next versions of ChatGPT and its competitors will do much better.